
Introduction

On Tuesday (May 14), at its annual Google I/O developer conference in California, Google announced a wide range of updates. During the two-hour keynote, CEO Sundar Pichai shared the company’s plans, most of which centered on AI.

It was AI all day at Google’s developer keynote. The company showed off new AI-powered chatbot tools, new search capabilities, and a bunch of machine intelligence upgrades for Android.

At Google I/O 2024, Google shared how it is making its products more helpful with AI, including improvements across Search, Workspace, Photos, Android, and more. Read on for the highlights.

Gemini Unleashed: Innovation and Expansion in 2024

Gemini Nano, Google’s on-device mobile large language model, is receiving an upgrade. It will now be known as Gemini Nano with Multimodality, a change announced by Google CEO Sundar Pichai, who mentioned that it can “transform any input into any output.”

Gemini’s Latest Technological Advances

- Gemini can gather information from text, images, audio, the web, or social videos, as well as live video from your phone’s camera, and then process that input to summarize its content or answer any related questions.

- Google presented a video illustrating this capability, in which someone used a camera to scan all the books on a shelf and recorded their titles in a database for later recognition.

- Gemini goes beyond just answering questions and summarizing data — Google intends for it to also carry out tasks for you.

- Google also previewed AI “agents” that you can delegate tasks to. It showcased the idea with an example in which someone photographs a pair of shoes and asks the agent to return them.

- The AI used image recognition to identify the shoes, searched Gmail for the receipt, and offered to initiate a return via email. It could also organize vacations, business trips, and other information-related tasks.

Introducing the Latest Gemini Models

Gemini is receiving an important update that will allow it to analyze longer documents, audio recordings, video recordings, codebases, and more, thanks to what Google calls the longest context window of any foundation model.

- Google highlighted two Gemini 1.5 models, each focused on different types of tasks.

- Gemini 1.5 Flash, a faster, lower-latency model, is optimized for tasks where speed matters most.

- Gemini 1.5 Pro, now available in Gemini Advanced, can handle up to 1 million tokens at once, far more than Anthropic’s Claude 3 (200K tokens) or GPT-4 Turbo (128K tokens).
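To put those context-window sizes in perspective, here is a rough sketch that estimates whether a document fits in each model’s window in a single request. It assumes about 4 characters per token, a common rule of thumb for English text; real tokenizers vary, so treat the results as ballpark figures. The limits used (1 million tokens for Gemini Advanced, 200K for Anthropic’s Claude 3, 128K for GPT-4 Turbo) and the function names are illustrative, not part of any official API.

```python
# Rough check of whether a document fits in a model's context window.
# Assumption: ~4 characters per token (a rule of thumb for English text);
# actual tokenizers, and non-English text, can differ substantially.
CHARS_PER_TOKEN = 4

# Context-window limits in tokens (illustrative figures).
CONTEXT_WINDOWS = {
    "Gemini Advanced": 1_000_000,
    "Claude 3": 200_000,
    "GPT-4 Turbo": 128_000,
}


def estimate_tokens(text: str) -> int:
    """Approximate the token count, rounding up."""
    return -(-len(text) // CHARS_PER_TOKEN)  # ceiling division


def models_that_fit(text: str) -> list[str]:
    """Return the models whose window can hold the whole text in one request."""
    needed = estimate_tokens(text)
    return [name for name, limit in CONTEXT_WINDOWS.items() if needed <= limit]


if __name__ == "__main__":
    doc = "x" * 2_000_000  # stand-in for a ~2 MB text dump, e.g. a codebase
    print(estimate_tokens(doc))   # 500000
    print(models_that_fit(doc))   # ['Gemini Advanced']
```

Under this heuristic, a 2-million-character text dump comes to about 500K tokens, which only the 1-million-token window can take in one go.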

Gemini Photos: Revolutionizing Your Photo Search Experience

Users are uploading over 6 billion photos to Google Photos every day, so it’s not surprising that some extra help is needed to sort through them all.

- Gemini, which is set to be integrated into Google Photos this summer, will provide additional search capabilities through the Ask Photos function.

- With Ask Photos, you can ask Gemini to search your photos and get more detailed results than before.

- For example, if you ask “What’s my license plate number again?”, Gemini scans your photos to find the most probable answer, saving you from searching through them manually.

In a Google blog post, Jerem Selier, a software engineer at Google Photos, wrote that the feature does not use data from your photos for advertising or to train Gemini AI models outside of Google Photos.

Gemini and Gmail

- Google aims to streamline communication by integrating Gemini into Gmail, where you can use the AI tool to search, summarize, and draft emails.

- It will also be able to handle more complex tasks.

- For instance, if you need to initiate a return for an online purchase, it can assist in locating the receipt and completing the return form if necessary.

Gemini and Google Maps

- Google has chosen to integrate Gemini into Google Maps, beginning with the Places API.

- Developers will now be able to display a brief overview of locations within their applications and websites, thereby reducing the time they would otherwise spend crafting unique descriptions.

A Closer Look at Project Astra

Project Astra, a prototype from Google DeepMind, works like a next-level version of Google Lens: a visual chatbot. Users can point their phone’s camera at objects and ask questions about their surroundings.

- Google demonstrated this through a video where someone asked Astra a series of questions based on what was around them.

- Astra boasts improved spatial and contextual understanding, allowing it to identify where the user is, explain code on a computer screen, or even brainstorm a creative band name for a pet.

- The demonstration showcased Astra’s voice-activated interactions through a phone’s camera and a camera integrated into unspecified smart glasses.

Google’s Generative AI: The Future of Search is Here

Over the past 25 years, Google Search has continuously evolved through technological shifts, enhancing its core systems to deliver high-quality, reliable information. With billions of facts about various subjects, Google provides immediate and trustworthy information.

- The introduction of generative AI into Google Search now allows for more complex tasks like research, planning, and brainstorming without extensive user legwork.

- One of these innovations, AI Overviews, has been tested in Search Labs and is now rolling out to users in the U.S. It provides quick summaries alongside relevant links, and Google says it has increased user satisfaction and traffic to websites.

- Soon, users will be able to adjust the complexity of AI Overviews to better suit their understanding or to simplify information further.

AI Overviews will soon include multi-step reasoning, allowing users to ask complex questions in a single query. Planning features are also being introduced, enabling users to create detailed plans directly from their search queries, with customizable options.

- Additionally, a new AI-organized results page will categorize search results under AI-generated headlines to enhance discovery and inspiration.

- Google is also expanding its video understanding capabilities, letting users search with a video, which helps when a question is hard to put into words.

- This feature will soon be available in Search Labs in the U.S., with plans to expand to other regions.

Overall, these advancements are set to transform how Google Search operates, making it a more powerful tool for users worldwide.

Impact of Generative AI on Curiosity and Knowledge with LearnLM

Google introduced an upgrade to NotebookLM that integrates the Gemini model, enhancing its ability to personalize educational content, as demonstrated with a customized physics lesson that used basketball examples.

- This advancement is part of a broader initiative where Google collaborates with educators and learning experts to incorporate learning science principles into its AI models and products.

- These principles include inspiring active learning, managing cognitive load, adapting to the learner’s needs, stimulating curiosity, and deepening metacognition.

- Google’s LearnLM, a new family of models fine-tuned for learning, is set to improve educational experiences across products such as Google Search, YouTube, and Gemini.

- Upcoming features include the ability to adjust AI Overviews in Google Search for simpler or more detailed explanations and to solve complex math and physics problems directly on Android devices.

- Additionally, a new tool on YouTube will allow users to engage with academic content interactively, asking questions or taking quizzes directly within videos.

These innovations aim to support deeper understanding and active engagement in learning rather than merely providing answers.

The New VideoFX: Expanding Features in ImageFX and MusicFX

Generative media is revolutionizing creative expression, allowing people to transform their ideas into various media forms, including text, music, images, and now video.

- Google introduced VideoFX, an experimental tool powered by Veo from Google DeepMind, designed to aid creatives in video storytelling.

- This tool allows for emotional nuance and cinematic effects in video creation and includes a Storyboard mode for detailed scene iteration.

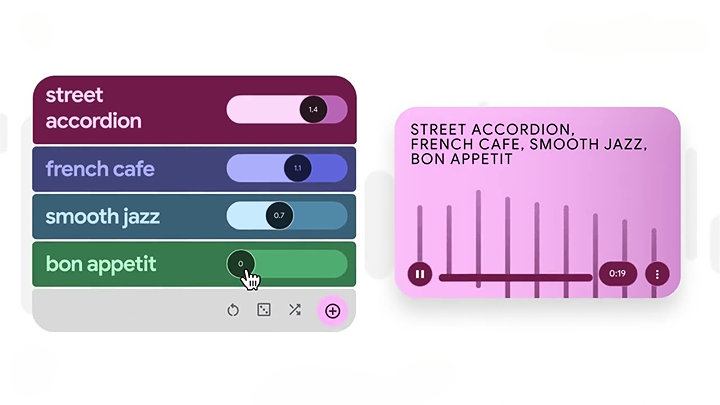

- Updates to ImageFX and MusicFX were also announced. ImageFX has introduced editing controls and a new model, Imagen 3, enhancing photorealism and image detail.

- MusicFX now features a DJ Mode that lets users create and mix beats. These tools are expanding globally and are now available in 110 countries and 37 languages.

Google is dedicated to advancing these tools responsibly, working alongside creators to understand their needs and integrating feedback into development. All content produced with these tools is marked with a digital watermark called SynthID to ensure responsible use.

Introducing PaliGemma and Gemma 2

PaliGemma

- PaliGemma is a powerful open vision-language model (VLM) designed to deliver strong fine-tuned performance on vision-language tasks.

- This includes image and short video captioning, visual question answering, understanding text in images, object detection, and object segmentation.

- Pretrained and fine-tuned checkpoints are available, as well as resources for open exploration and research.

- PaliGemma is available on GitHub, Hugging Face, Kaggle, Vertex AI Model Garden, and ai.nvidia.com.
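Object detection is one of the tasks listed above. As a sketch, assuming the output format documented for PaliGemma (a prompt such as `detect cat ; dog` returns, for each object, four `<locNNNN>` tokens giving y_min, x_min, y_max, x_max binned to 0 to 1023, followed by the label, with objects separated by ` ; `), the helper below converts that text into pixel coordinates. The `Box` type and `parse_detections` function are hypothetical names for illustration, and running the model itself is not shown.

```python
import re
from typing import NamedTuple

# Assumed PaliGemma detection format: four <locNNNN> tokens
# (y_min, x_min, y_max, x_max, each binned to 0-1023) followed by a label;
# multiple detections are separated by " ; ".
_DETECTION = re.compile(r"<loc(\d{4})><loc(\d{4})><loc(\d{4})><loc(\d{4})>\s*([^;<]+)")


class Box(NamedTuple):
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float


def parse_detections(output: str, width: int, height: int) -> list[Box]:
    """Convert PaliGemma-style detection text into pixel-space boxes."""
    boxes = []
    for m in _DETECTION.finditer(output):
        # Normalize each bin index to [0, 1), then scale y by height, x by width.
        y0, x0, y1, x1 = (int(v) / 1024 for v in m.groups()[:4])
        boxes.append(
            Box(
                label=m.group(5).strip(),
                x_min=x0 * width,
                y_min=y0 * height,
                x_max=x1 * width,
                y_max=y1 * height,
            )
        )
    return boxes


if __name__ == "__main__":
    text = "<loc0256><loc0512><loc0768><loc1023> cat ; <loc0000><loc0000><loc0512><loc0512> dog"
    for box in parse_detections(text, width=640, height=480):
        print(box)
```

Dividing by 1024 and scaling y by the image height and x by its width is the conversion described for PaliGemma; it is worth checking against the specific checkpoint you use.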

Gemma 2

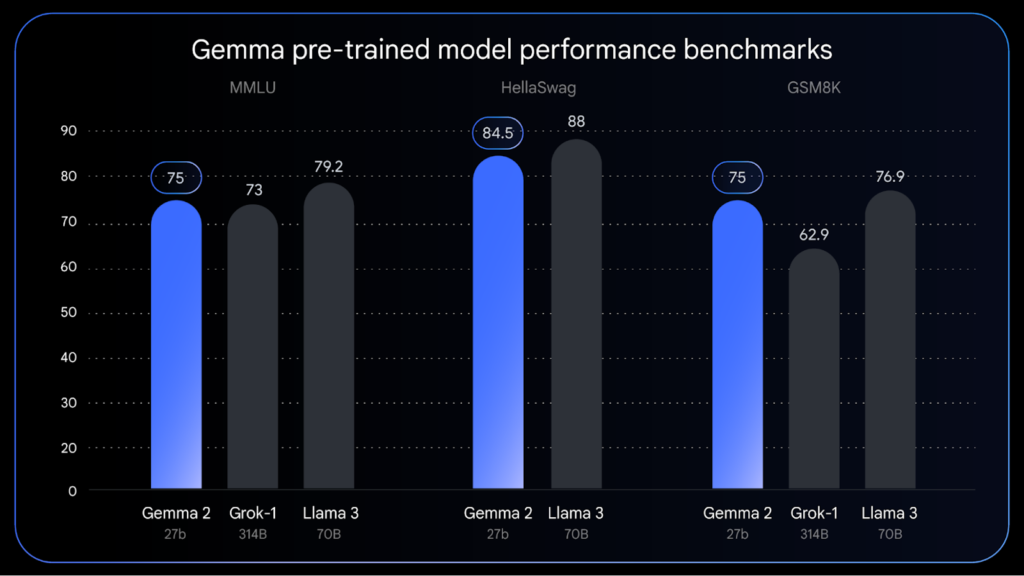

Gemma 2 will be available in new sizes for a broad range of AI developer use cases and features a brand-new architecture designed for breakthrough performance and efficiency. Key benefits include:

- Class-Leading Performance: Gemma 2 delivers performance comparable to Llama 3 70B at less than half the size, setting a new standard in the open model landscape.

- Reduced Deployment Costs: Gemma 2’s efficient design allows it to fit on less than half the compute of comparable models, making deployment more accessible and cost-effective.

- Versatile Tuning Toolchains: Gemma 2 will provide developers with robust tuning capabilities across a diverse ecosystem of platforms and tools, making fine-tuning easier than ever.
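The “less than half the size” claim is easy to sanity-check with back-of-the-envelope arithmetic. Google cited a 27-billion-parameter Gemma 2 model against Llama 3 70B; the sketch below assumes 16-bit (bf16) weights at 2 bytes per parameter and ignores activations, KV cache, and runtime overhead, so it only approximates the memory needed to hold the weights. The `weight_memory_gb` helper is a hypothetical name.

```python
# Back-of-the-envelope weight memory: parameters x bytes per parameter.
# Assumptions: weights only (no activations, KV cache, or runtime overhead);
# GB here means 1e9 bytes.
BYTES_PER_PARAM = {"bf16": 2, "int8": 1, "int4": 0.5}


def weight_memory_gb(num_params: float, dtype: str = "bf16") -> float:
    """Approximate memory needed just to hold the model weights."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


gemma2_27b = weight_memory_gb(27e9)  # 54.0
llama3_70b = weight_memory_gb(70e9)  # 140.0
print(f"Gemma 2 27B: {gemma2_27b:.0f} GB, Llama 3 70B: {llama3_70b:.0f} GB")
print(f"ratio: {gemma2_27b / llama3_70b:.2f}")  # 0.39
```

Under these assumptions the weights alone shrink from about 140 GB to about 54 GB, roughly 39% of the larger model, which is one reason a smaller model can be cheaper to serve.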

Safety and Security

The keynote also showcased a scam detection feature for Android that can monitor phone calls for language associated with scams, such as requests to transfer money to a different account.

- If suspicious activity is detected, the feature will intervene and display a prompt advising the user to end the call.

- Google says the feature runs locally on the device, so phone calls are not sent to the cloud for analysis, which helps protect privacy.

- Additionally, Google has enhanced its SynthID watermarking tool, designed to differentiate AI-generated content.

- This tool can aid in identifying misinformation, deepfakes, and phishing attempts by embedding an invisible watermark within the media, which can be detected through pixel-level analysis by specialized software.
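Google has not published how its on-device scam detection works under the hood, so the following is only a toy illustration of the general idea described above: flagging risky phrases in a call transcript locally and warning the user once enough of them appear. It swaps in simple regular-expression matching; the phrase list, the two-signal threshold, and the function names are all hypothetical.

```python
import re

# Toy stand-in for scam detection: NOT Google's method. A few hypothetical
# patterns for phrases often seen in scam calls.
SCAM_PATTERNS = [
    r"\btransfer (?:the |your )?(?:money|funds)\b",
    r"\b(?:new|different|safe|secure) account\b",
    r"\bgift cards?\b",
    r"\b(?:one-time|verification) code\b",
    r"\bact (?:now|immediately)\b",
]
_COMPILED = [re.compile(p, re.IGNORECASE) for p in SCAM_PATTERNS]


def scam_signals(transcript: str) -> list[str]:
    """Return every phrase in the transcript that matches a risky pattern."""
    return [m.group(0) for rx in _COMPILED for m in rx.finditer(transcript)]


def should_warn(transcript: str, threshold: int = 2) -> bool:
    """Suggest ending the call once enough signals have been seen."""
    return len(scam_signals(transcript)) >= threshold


if __name__ == "__main__":
    call = ("This is your bank. Your account is compromised, so please "
            "transfer your money to a new secure account and act now.")
    print(scam_signals(call))  # ['transfer your money', 'secure account', 'act now']
    print(should_warn(call))   # True
```

Requiring more than one signal is a simple way to reduce false alarms on ordinary conversations; a production system would rely on a far more capable model than keyword matching.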

These updates extend SynthID to watermark AI-generated text in the Gemini app and on the web, as well as videos generated with Veo. Google has also announced its intention to release SynthID as an open-source tool later this summer.
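To illustrate what an “invisible” but machine-detectable mark means, here is a toy least-significant-bit (LSB) watermark. This is a different and much simpler technique than SynthID: Google describes SynthID as designed to remain detectable after edits such as filters and lossy compression, whereas an LSB mark is fragile. The flat list of 8-bit pixel values, the signature, and the function names are assumptions for illustration.

```python
# Toy least-significant-bit (LSB) watermark. This is NOT SynthID; it only
# shows how a mark can be invisible to the eye yet recoverable from pixels.


def embed(pixels: list[int], bits: list[int]) -> list[int]:
    """Overwrite the lowest bit of the first len(bits) 8-bit pixel values."""
    if len(bits) > len(pixels):
        raise ValueError("watermark longer than image")
    marked = list(pixels)
    for i, bit in enumerate(bits):
        marked[i] = (marked[i] & ~1) | bit  # each value changes by at most 1
    return marked


def extract(pixels: list[int], n_bits: int) -> list[int]:
    """Read back the lowest bit of the first n_bits pixel values."""
    return [p & 1 for p in pixels[:n_bits]]


def is_marked(pixels: list[int], signature: list[int]) -> bool:
    """Detect the watermark by comparing extracted bits with the signature."""
    return extract(pixels, len(signature)) == signature


if __name__ == "__main__":
    image = [200, 13, 54, 255, 0, 128, 77, 90]  # hypothetical grayscale pixels
    signature = [1, 0, 1, 1, 0, 0, 1, 0]
    marked = embed(image, signature)
    print(marked)                        # [201, 12, 55, 255, 0, 128, 77, 90]
    print(is_marked(marked, signature))  # True
    print(is_marked(image, signature))   # False
```

Each pixel value changes by at most 1 out of 255, which is imperceptible, yet a detector that knows the signature reads it back exactly. Re-encoding the image as a lossy JPEG would likely destroy it, which is the weakness that learned watermarks like SynthID aim to overcome.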

Conclusion

Google I/O 2024 demonstrated Google’s continued commitment to innovation. The event showcased a wide range of updates, from AI enhancements across Search, Photos, and Workspace to significant Android upgrades and new open models such as Gemma 2.

These developments promise to enhance the user experience and signal Google’s focus on responsible AI, from SynthID watermarking to on-device scam detection. As the tech giant forges ahead, its influence on the industry and on everyday digital interactions remains profound, setting the stage for a future where technology is intertwined with every facet of life.

Faisal Rafeeq is an SEO, PPC, and digital marketing expert. Faisal has worked on multiple e-commerce and web development projects, creating tailored, results-oriented solutions. Recent projects include ERPCorp, Wheelrack, TN Nursery, PROSGlobalinc, Patient9, and many more.