
Introduction

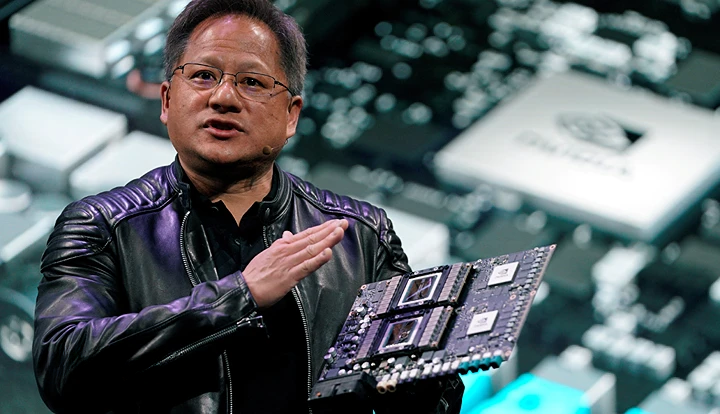

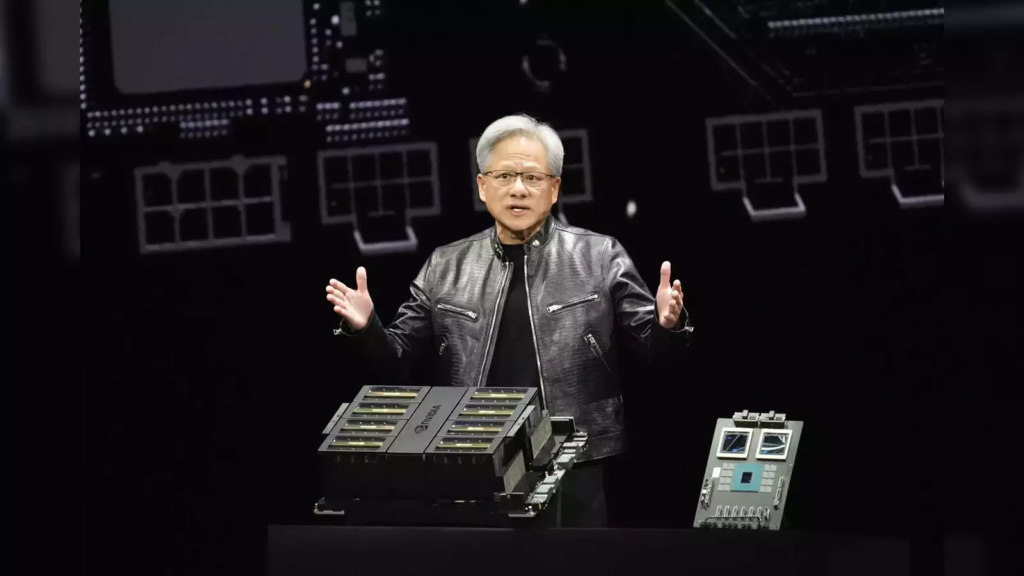

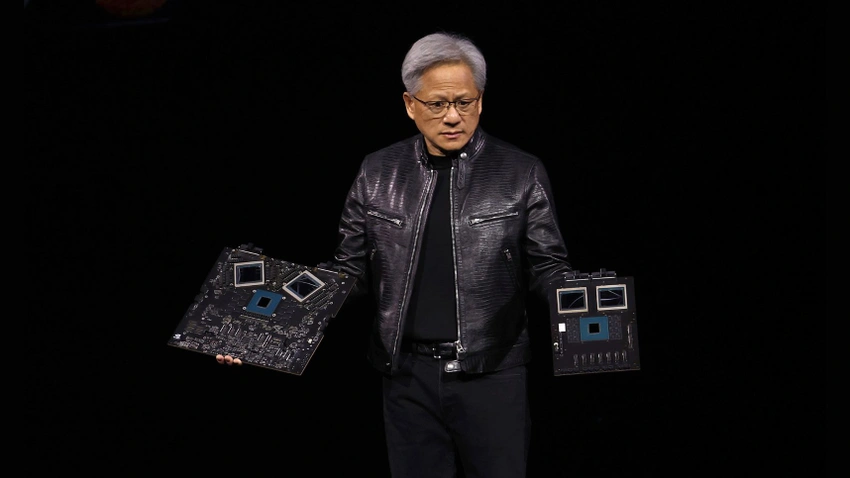

At Nvidia’s 2024 GTC AI conference, the company unveiled its highly anticipated Blackwell platform. The platform includes a new graphics processing unit (GPU) that Nvidia bills as the “world’s most powerful chip,” the GB200 NVL72 rack-scale system, and a suite of enterprise AI tools.

Its introduction marks a significant milestone in AI hardware. By offering greater speed, scalability, and power efficiency, Blackwell could change how data centers, cloud computing services, and AI research operate.

Leading cloud service providers have announced plans to adopt Blackwell for generative AI, deep learning, and other cloud computing services, underscoring Nvidia’s push to extend the boundaries of AI technology.

Development of Nvidia Blackwell

According to Nvidia, the Blackwell GPU architecture incorporates six new technologies for accelerated computing, intended to drive advances in data processing across a wide range of industries.

Jensen Huang, founder and CEO of Nvidia, said: “For three decades we’ve pursued accelerated computing, with the goal of enabling transformative breakthroughs like deep learning and AI.”

Historical context: Evolution of AI chips leading up to Blackwell

Early Days (Pre-2010)

- Initially, AI tasks ran mostly on CPUs, which offered limited parallel throughput and consumed more energy per operation.

- Graphics processing units (GPUs), originally engineered for graphics rendering, came to be recognized for their efficiency at the highly parallel computations that are crucial for AI.

The Rise of GPUs for AI (2010s)

- Nvidia’s introduction of the CUDA (Compute Unified Device Architecture) programming model in 2006 was a game-changer, unlocking GPUs for general-purpose computing and AI applications.

- Groundbreaking GPU architectures such as Tesla (2008) and Fermi (2010) began to offer substantial performance boosts for AI tasks over traditional CPUs.

- The surge in deep learning demanded even more robust computational power, which these new GPUs began to provide.

The Era of Specialized AI Chips (2010s-Present)

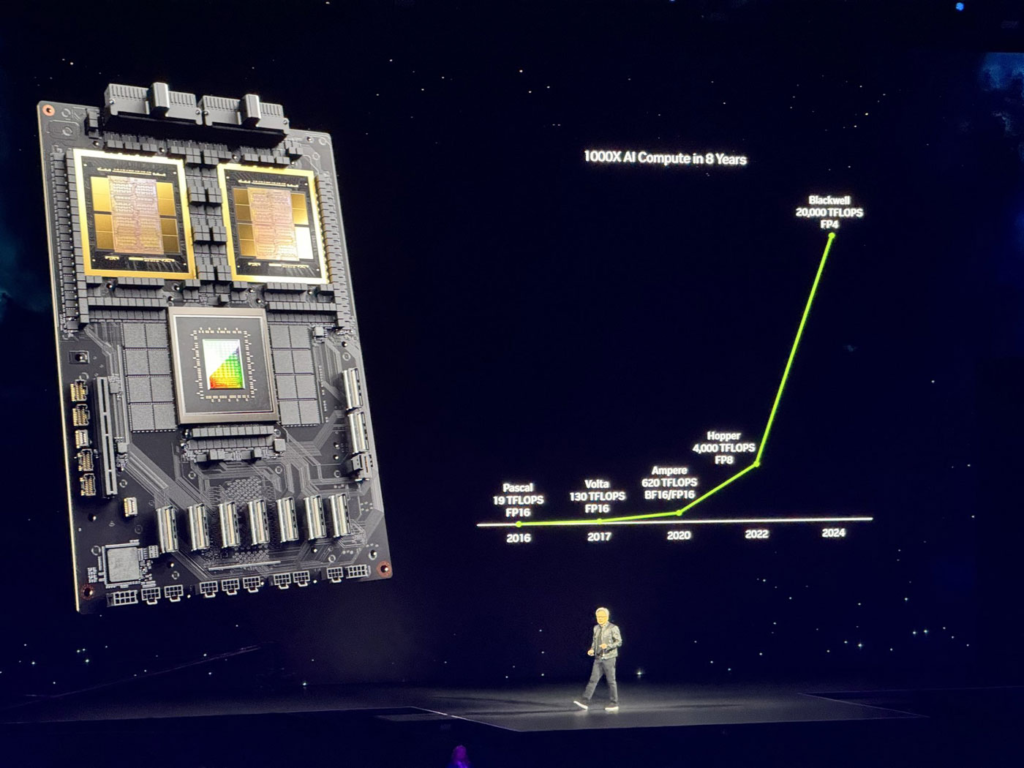

- Nvidia’s Volta architecture (2017) introduced Tensor Cores, which are dedicated units for the matrix multiply-accumulate operations at the heart of deep learning. This marked a major step toward hardware optimized specifically for AI workloads.

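- Subsequent architectures, including Turing (2018), Ampere (2020), Hopper (2022), and Ada Lovelace (2022), continued to enhance AI processing through improved Tensor Cores and higher memory bandwidth. Hopper, Blackwell’s direct data center predecessor, also introduced the first-generation Transformer Engine.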

- This period also saw increased competition as other tech giants like Intel and AMD introduced their AI-centric chips, enriching the AI technology landscape.
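A Tensor Core computes a fused matrix multiply-accumulate, D = A × B + C, on a small tile. The sketch below emulates that operation in plain Python. Inputs are rounded to FP16 and the running sum is kept in FP32, which matches the mixed-precision mode Volta introduced. The helper names are hypothetical. This is only a numerical model; real hardware differs in tile shape, summation order, and internal rounding.

```python
import struct
from typing import List

Matrix = List[List[float]]


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_fp32(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 single-precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def mma_tile(a: Matrix, b: Matrix, c: Matrix) -> Matrix:
    """Emulate D = A x B + C with FP16 inputs and FP32 accumulation."""
    rows, inner, cols = len(a), len(b), len(b[0])
    d: Matrix = []
    for i in range(rows):
        out_row = []
        for j in range(cols):
            acc = to_fp32(c[i][j])  # accumulator starts from C, held in FP32
            for k in range(inner):
                # The product of two FP16 values is exact in FP32,
                # so only the running sum needs rounding.
                prod = to_fp16(a[i][k]) * to_fp16(b[k][j])
                acc = to_fp32(acc + prod)
            out_row.append(acc)
        d.append(out_row)
    return d


if __name__ == "__main__":
    identity = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
    b = [[0.1 * (i + j + 1) for j in range(4)] for i in range(4)]
    zeros = [[0.0] * 4 for _ in range(4)]
    d = mma_tile(identity, b, zeros)
    print(d[0][0])  # 0.1 rounded to FP16: 0.0999755859375
```

Multiplying by the identity returns B unchanged except for FP16 rounding. That rounding is the accuracy trade-off mixed-precision hardware accepts in exchange for throughput.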

The Leap to Blackwell (2024)

- The Blackwell platform represents a major leap forward for Nvidia, emphasizing three critical advancements:

  - Generative AI: Enabling real-time applications built on large language models with trillions of parameters.

  - Exascale Computing: Equipping users to tackle some of the most complex problems, which require exascale computing. Exascale is defined as at least one quintillion (10^18) floating-point operations per second.

  - Security: Introducing robust security features designed to protect confidential AI training and inference.
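Reaching the exascale threshold is a matter of arithmetic: divide 10^18 operations per second by the throughput of one accelerator. The sketch below takes a hypothetical per-GPU peak (14 petaFLOPS is only an illustrative input) and a hypothetical sustained-efficiency factor. Real systems also lose throughput to communication, and peak figures depend heavily on numeric precision.

```python
import math

EXAFLOP = 1e18   # one quintillion floating-point operations per second
PETAFLOP = 1e15


def gpus_for_exascale(peak_pflops_per_gpu: float, efficiency: float = 1.0) -> int:
    """Smallest GPU count whose sustained throughput reaches one exaFLOP/s.

    peak_pflops_per_gpu: hypothetical peak throughput of one GPU, in petaFLOP/s.
    efficiency: assumed fraction of peak actually sustained (0 < efficiency <= 1).
    """
    if peak_pflops_per_gpu <= 0 or not 0 < efficiency <= 1:
        raise ValueError("throughput must be positive and efficiency in (0, 1]")
    sustained = peak_pflops_per_gpu * PETAFLOP * efficiency
    return math.ceil(EXAFLOP / sustained)


if __name__ == "__main__":
    print(gpus_for_exascale(14.0))       # 72 GPUs at 100% of peak
    print(gpus_for_exascale(14.0, 0.5))  # 143 GPUs if only half of peak is sustained
```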

Key technological advancements and breakthroughs in chip design

Blackwell incorporates several groundbreaking advancements, building on a strong legacy of AI chip development:

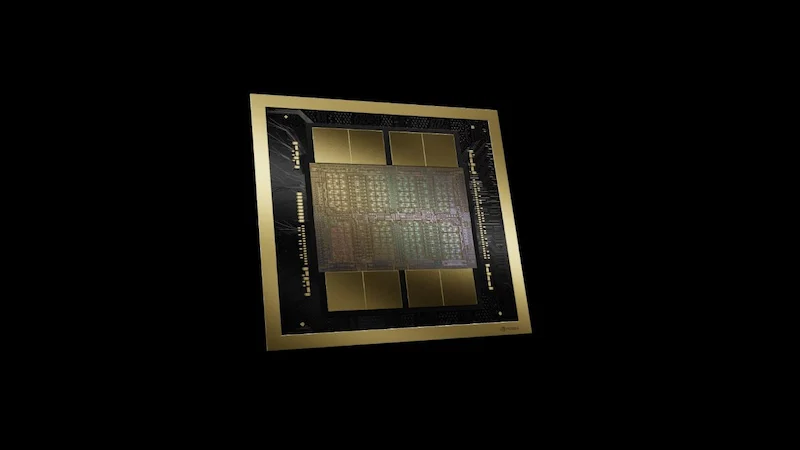

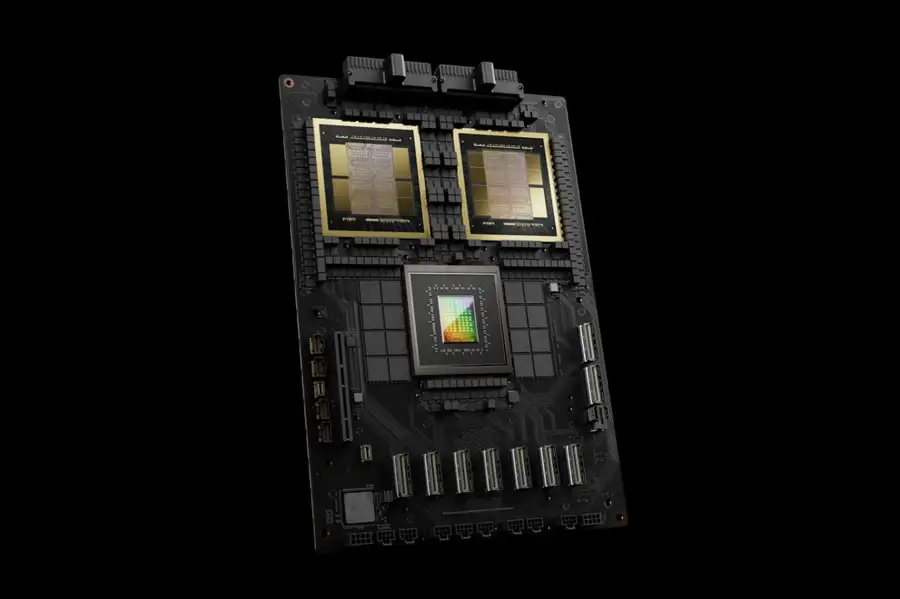

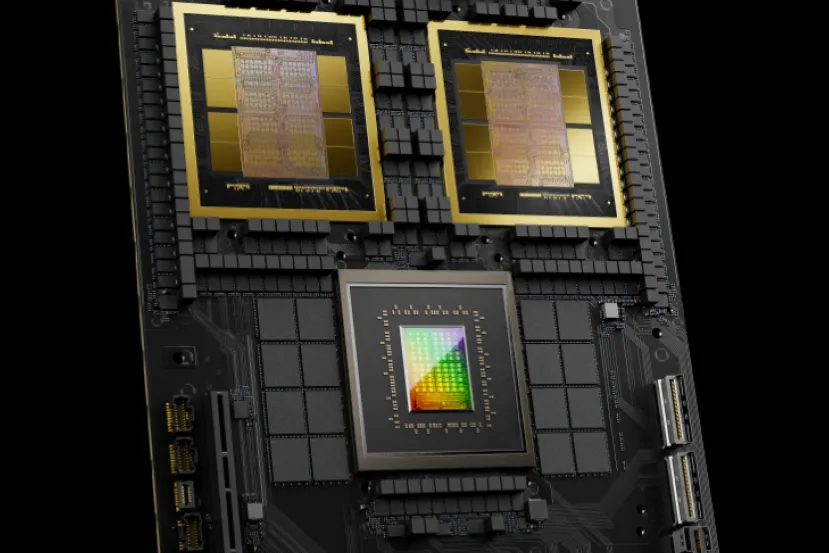

- Dual-Die Design: Each Blackwell GPU combines two reticle-limited dies connected by a 10 TB/s chip-to-chip link, so the pair functions as a single, unified GPU. This allows far more transistors (208 billion in total) than a single die could hold, increasing processing capability.

- Second-Generation Transformer Engine: Specifically engineered for AI, this enhanced engine boosts the efficiency of both training and deploying complex AI models, particularly large language models (LLMs), making it ideal for handling sophisticated AI tasks.

- Micro-tensor Scaling: Fine-grained scaling factors allow models to run at lower precision, down to 4-bit floating point, reducing memory use while aiming to preserve accuracy. This makes large-scale AI workloads more efficient to process.

- Focus on Efficiency: Blackwell is designed to deliver substantially more performance per watt than its predecessors, a significant step toward more sustainable high-performance computing.
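Nvidia has not published micro-tensor scaling as code. The general idea of fine-grained, block-wise scaling can still be sketched: each small block of values gets its own scale factor, so a 4-bit format only has to cover that block's range. The sketch assumes the FP4 E2M1 value grid, a default block size of 16, and scale factors kept as ordinary floats (hardware formats store them compactly). All function names are hypothetical.

```python
from typing import List, Tuple

# Non-negative magnitudes representable in FP4 E2M1 (1 sign, 2 exponent, 1 mantissa bit).
E2M1_MAGNITUDES = (0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0)
E2M1_MAX = 6.0


def quantize_e2m1(x: float) -> float:
    """Round x to the nearest FP4 E2M1 value, saturating at +/-6.

    Ties resolve to the smaller magnitude because min() keeps the first match.
    """
    mag = min(E2M1_MAGNITUDES, key=lambda v: abs(abs(x) - v))
    return mag if x >= 0 else -mag


def quantize_blocks(values: List[float], block_size: int = 16) -> List[Tuple[float, List[float]]]:
    """Split values into blocks and quantize each block with its own scale factor."""
    blocks = []
    for start in range(0, len(values), block_size):
        block = values[start:start + block_size]
        amax = max(abs(v) for v in block)
        # Map the block's largest magnitude onto the largest FP4 value.
        scale = amax / E2M1_MAX if amax > 0 else 1.0
        blocks.append((scale, [quantize_e2m1(v / scale) for v in block]))
    return blocks


def dequantize_blocks(blocks: List[Tuple[float, List[float]]]) -> List[float]:
    """Reconstruct approximate values by multiplying each code by its block scale."""
    return [code * scale for scale, codes in blocks for code in codes]


if __name__ == "__main__":
    values = [0.001, 0.002, 0.003, 100.0, 50.0, 25.0]
    fine = dequantize_blocks(quantize_blocks(values, block_size=3))
    coarse = dequantize_blocks(quantize_blocks(values, block_size=6))
    print("per-block scales:", fine)
    print("one shared scale:", coarse)  # the small values collapse to zero
```

With one shared scale, the three small values round to zero. With a separate scale for each block of three, they survive. Real block-scaled formats choose block sizes and scale encodings to balance this accuracy gain against the extra storage the scales need.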

The role of Blackwell in advancing Nvidia’s AI research and development

- Generative AI: With Blackwell, Nvidia aims to enable real-time applications built on large language models (LLMs). This could accelerate progress on more capable chatbots, virtual assistants, and automated content-generation tools.

- Scientific Computing: Blackwell’s robust processing power accelerates simulations and fosters innovations in critical areas such as pharmaceuticals and materials science. Faster computations mean quicker breakthroughs, potentially revolutionizing these fields.

- Overall Efficiency: Nvidia says Blackwell significantly lowers the cost of training and running AI models, which could speed up research and development across diverse industries.

Blackwell represents a substantial advancement in AI chip technology. Amazon Web Services, Dell Technologies, Google, Meta, Microsoft, OpenAI, Oracle, Tesla, and xAI are among the many organizations expected to adopt it.

Technical Specifications

Blackwell boasts a revolutionary architecture, building upon past successes and introducing groundbreaking features. Here’s a closer look:

Detailed Analysis of Blackwell’s Architecture

- GB200 Grace Blackwell Superchip: This core component connects two Blackwell GPUs to an Nvidia Grace CPU over a 900 GB/s NVLink chip-to-chip interconnect, creating a unified system with immense processing power.

- Second-Gen Transformer Engine: This engine specifically targets the needs of Transformer-based AI models, the foundation for LLMs. It offers significant speedups compared to previous architectures.

- HBM3e Memory: High Bandwidth Memory (HBM) provides exceptional memory bandwidth for handling massive datasets efficiently. HBM3e is an enhanced version of HBM3 that offers even higher bandwidth.

- Micro-tensor Scaling: This technology makes efficient use of memory with the smaller data types (e.g., 4-bit floating point) used in some AI models, while aiming to preserve accuracy.

- Decompression Engine: Dedicated hardware for on-the-fly decompression of data streams, which Nvidia says accelerates database queries and data analytics.

- Reliability, Availability, and Serviceability (RAS) Engine: This built-in engine proactively identifies and mitigates potential hardware issues, minimizing downtime.
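Memory bandwidth and low-precision formats interact directly. When a model generates one token at a time, every weight typically has to be streamed from memory once per token. Bandwidth divided by model size therefore bounds the decode rate. The sketch below assumes batch size 1 and ignores KV-cache traffic, activations, and compute time. The model size and the 8 TB/s bandwidth are hypothetical inputs, not measured Blackwell results.

```python
def max_decode_tokens_per_s(num_params: float, bytes_per_param: float,
                            bandwidth_bytes_per_s: float) -> float:
    """Upper bound on single-stream decode speed for a memory-bound model.

    Assumes each weight is read from memory exactly once per generated token
    and that nothing else (KV cache, activations, compute) costs time.
    """
    model_bytes = num_params * bytes_per_param
    return bandwidth_bytes_per_s / model_bytes


if __name__ == "__main__":
    params = 70e9      # hypothetical 70-billion-parameter model
    bandwidth = 8e12   # hypothetical 8 TB/s of memory bandwidth
    for name, width in [("FP16", 2.0), ("FP8", 1.0), ("FP4", 0.5)]:
        rate = max_decode_tokens_per_s(params, width, bandwidth)
        print(f"{name}: at most {rate:.0f} tokens/s")
```

Each halving of the bytes per weight doubles this ceiling. This is one reason higher memory bandwidth and 4-bit formats complement each other.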

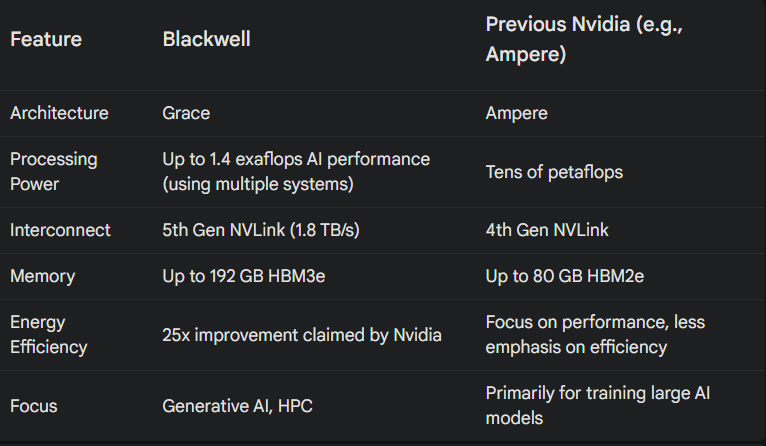

Comparison with Previous Nvidia Chips

Performance Metrics

- Processing Power: A single B100 GPU delivers up to 14 petaFLOPS of FP4 Tensor Core throughput (with sparsity). Multi-system configurations achieve exaflop-scale performance.

- Speed: Significant speed improvements over previous architectures, especially for Transformer-based models. Nvidia claims the GB200 NVL72 delivers up to 30x faster LLM inference than the same number of H100 GPUs.

- Energy Efficiency: Nvidia claims the GB200 NVL72 reduces cost and energy consumption by up to 25x for LLM inference compared with the same number of H100 GPUs.
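An efficiency multiple such as 25x translates directly into energy per job: energy equals work divided by efficiency, measured in operations per joule. The sketch below uses purely hypothetical workload and efficiency numbers. It shows that a 25x gain in work per joule cuts the energy for the same job to 1/25. Efficiency claims of this kind are "up to" figures for specific workloads, so real savings will vary.

```python
JOULES_PER_KWH = 3.6e6


def energy_kwh(work_ops: float, ops_per_joule: float) -> float:
    """Energy in kWh needed to complete work_ops operations at a given efficiency."""
    return work_ops / ops_per_joule / JOULES_PER_KWH


if __name__ == "__main__":
    job = 3.6e18          # hypothetical job size, in operations
    baseline_eff = 1e12   # hypothetical baseline efficiency, operations per joule
    baseline = energy_kwh(job, baseline_eff)
    improved = energy_kwh(job, baseline_eff * 25)
    print(f"baseline: {baseline:.2f} kWh, 25x more efficient: {improved:.2f} kWh")
```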

Overall, Blackwell represents a substantial leap in AI chip design. Its architecture caters to the ever-growing demands of complex AI models, particularly in the realm of generative AI and high-performance computing.

Applications and Impact

Blackwell’s capabilities extend far beyond theoretical benchmarks. Here’s a glimpse into its potential applications and impact:

Industry-Specific Use Cases

- Healthcare:

  - Drug Discovery: Blackwell can accelerate simulations of molecules and protein interactions, leading to faster development of new drugs.

  - Medical Imaging: Real-time analysis of medical scans for faster and more accurate diagnoses.

- Automotive:

  - Autonomous Vehicles: Blackwell can power advanced perception systems for real-time obstacle detection and path planning in self-driving cars.

  - Predictive Maintenance: Analyzing sensor data from vehicles to predict potential failures and prevent breakdowns.

- Finance:

  - Fraud Detection: Real-time analysis of financial transactions to identify fraudulent activity.

  - High-Frequency Trading: Blackwell’s processing speed can give traders an edge in analyzing market data and making trading decisions.

- Other Industries:

  - Manufacturing: Optimizing production processes by analyzing sensor data and predicting equipment failures.

  - Media & Entertainment: Creating hyper-realistic special effects and generating personalized content recommendations.

Advancements in Machine Learning and Deep Learning

- Larger and More Complex Models: Blackwell’s architecture allows researchers to develop and train models with billions or even trillions of parameters, pushing the boundaries of machine learning capabilities.

- Generative AI Revolution: Blackwell’s strengths in this area should accelerate the development of AI that can create realistic text, images, and even code, impacting various creative fields.

- Faster Experimentation and Innovation: Blackwell’s efficiency will lead to faster training times and reduced costs, enabling researchers to iterate on ideas more quickly and accelerate breakthroughs in AI research.

Impact on AI Research and Practical Applications

- Democratization of AI: Blackwell’s capabilities, coupled with cloud-based platforms, will make advanced AI tools more accessible to smaller companies and researchers, fostering broader innovation.

- Real-World Applications: Blackwell’s real-time processing abilities will enable the development of practical AI applications that can directly impact people’s lives in various sectors like healthcare, finance, and autonomous vehicles.

- Ethical Considerations: The increasing power of AI raises ethical concerns. The development of powerful AI tools like Blackwell necessitates responsible development and deployment strategies to mitigate potential risks.

Challenges and Limitations of Nvidia Blackwell

While Nvidia’s Blackwell boasts impressive capabilities, it’s not without its challenges and limitations:

Technical Challenges

- Manufacturing Complexity: Packing 208 billion transistors into a single GPU package, across two dies built on TSMC’s custom 4NP process, pushes the boundaries of current manufacturing. Low yields and high production costs remain potential hurdles.

- Software Optimization: Fully utilizing Blackwell’s architecture will require specialized software tools and adaptations of existing AI frameworks. Optimizing existing code for this new architecture might be a significant undertaking.
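The classic Poisson yield model shows why large chips are hard to manufacture. In this model, the fraction of defect-free dies is exp(-A × D0), where A is die area and D0 is defect density. The sketch below is a textbook simplification with hypothetical area and defect-density values, not TSMC or Nvidia data. It shows that yield falls off exponentially as die area grows. This helps explain why a GPU might be built from two separately testable dies rather than one die of double the area.

```python
import math


def poisson_yield(area_cm2: float, defects_per_cm2: float) -> float:
    """Expected fraction of dies with zero defects under a Poisson defect model."""
    return math.exp(-area_cm2 * defects_per_cm2)


if __name__ == "__main__":
    d0 = 0.1    # hypothetical defect density, defects per cm^2
    die = 8.0   # hypothetical die area in cm^2 (about 800 mm^2)
    print(f"{die:.0f} cm^2 die:  {poisson_yield(die, d0):.1%} defect-free")
    print(f"{2 * die:.0f} cm^2 die: {poisson_yield(2 * die, d0):.1%} defect-free")
```

Doubling the area squares the yield fraction under this model. Smaller dies that can be tested before packaging waste less silicon when a defect occurs.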

Limitations Compared to Competitors

- Focus on Generative AI: While Blackwell excels in generative AI tasks, competitors might offer more balanced performance across various AI workloads. Companies like Intel and AMD are also developing next-generation AI chips, and their offerings might cater to a broader range of AI applications.

- Limited Availability: Initial production limitations are expected, potentially hindering widespread adoption compared to established solutions.

Power Consumption and Heat Generation

- Energy Efficiency: While Nvidia claims significant efficiency improvements, the immense processing power still requires substantial energy. Balancing performance with lower power consumption remains an ongoing challenge.

- Heat Management: Effective cooling is essential to dissipate the heat generated by Blackwell. High-density systems such as the GB200 NVL72 rely on liquid cooling, which adds to system complexity and cost.
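Nearly all the electrical power a GPU draws ends up as heat. The coolant flow needed to carry that heat away follows from the heat-balance relation P = (mass flow) × c × ΔT. The sketch below assumes water as the coolant, with a specific heat of about 4186 J/(kg·K) and a density of about 1 kg/L, and a hypothetical 100 kW rack heat load. It ignores pump, pressure-drop, and heat-exchanger details.

```python
WATER_SPECIFIC_HEAT = 4186.0  # J/(kg*K), approximate value for liquid water
WATER_DENSITY = 1.0           # kg/L, approximate


def coolant_flow_l_per_min(heat_load_w: float, delta_t_k: float) -> float:
    """Water flow (litres per minute) that absorbs heat_load_w with a delta_t_k temperature rise."""
    if delta_t_k <= 0:
        raise ValueError("temperature rise must be positive")
    mass_flow_kg_per_s = heat_load_w / (WATER_SPECIFIC_HEAT * delta_t_k)
    return mass_flow_kg_per_s / WATER_DENSITY * 60.0


if __name__ == "__main__":
    rack_heat_w = 100_000.0  # hypothetical rack heat load in watts
    for rise in (5.0, 10.0, 15.0):
        flow = coolant_flow_l_per_min(rack_heat_w, rise)
        print(f"dT = {rise:.0f} K -> about {flow:.0f} L/min of water")
```

Halving the allowed temperature rise doubles the required flow. This is why dense racks need carefully engineered liquid loops rather than airflow alone.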

Addressing these challenges will be crucial for the widespread adoption of Blackwell. Advancements in manufacturing processes, software optimization, and cooling technologies will be key to overcoming these limitations.

Future Prospects

The arrival of Blackwell marks a significant leap, but Nvidia’s journey doesn’t end here. Here’s a glimpse into the future of Nvidia’s AI chips and the broader implications:

- Focus on Specialization: Building upon Blackwell’s foundation, Nvidia might develop a family of specialized AI chips. These chips could be tailored for specific tasks like computer vision, natural language processing, or scientific computing, offering even better performance and efficiency.

- Neuromorphic Computing: This emerging field focuses on chips that mimic the human brain’s structure and function. Nvidia might explore integrating neuromorphic elements into future AI chips for tasks that require human-like learning and adaptation.

- Heterogeneous Integration: Combining different processing elements like CPUs, GPUs, and specialized AI accelerators on a single chip could be another avenue for future development. This would allow for more efficient and optimized processing for various AI workloads.

Conclusion

In conclusion, Nvidia’s Blackwell GPU architecture represents a watershed moment in the evolution of AI chips. Its innovative design, groundbreaking features, and focus on generative AI position it as a powerful tool for researchers and developers.

From revolutionizing scientific computing to enabling real-time AI applications, Blackwell’s impact will be felt across various industries. However, technical challenges like manufacturing complexity and heat dissipation need to be addressed for widespread adoption.

As Nvidia continues to push the boundaries of AI chip design, the future holds immense potential for even more specialized, efficient, and accessible AI hardware, shaping the trajectory of AI research and development for years to come.

Deepak Wadhwani has over 20 years of experience in software and wireless technologies. He has worked with Fortune 500 companies including Intuit, ESRI, Qualcomm, Sprint, Verizon, Vodafone, Nortel, Microsoft and Oracle in over 60 countries. Deepak has worked on Internet marketing projects in San Diego, Los Angeles, Orange County, Denver, Nashville, Kansas City, New York, San Francisco and Huntsville. He has founded technology startups behind one of the first city guides, online yellow pages, and web-based enterprise solutions. He is an internet marketing and technology expert and co-founder of a San Diego Internet marketing company.