Introduction

OpenAI has unveiled a significant update to its popular ChatGPT platform. This update presents GPT-4o, a robust new AI model that brings “GPT-4-class chat” to the application.

Chief Technology Officer Mira Murati and other OpenAI staff showcased the company’s latest flagship model, which powers a friendly AI chatbot that can hold real-time spoken conversations much like a human.

The Spring Update highlights GPT-4o’s advancement towards more natural human-computer interaction, showcasing OpenAI’s commitment to making advanced AI more accessible for enhancing productivity and creativity globally.

Overview of GPT-4o ‘omni’ model and its capabilities

OpenAI’s GPT-4o ‘Omni’ model is a major advancement in generative AI, with multimodal capabilities for text, speech, and visual data. The “o” in GPT-4o stands for “omni,” indicating its versatility across various forms of communication and media.

This model not only retains the intelligence level of its predecessor, GPT-4, but also extends its capabilities, making it a powerful tool for AI-driven applications.

Multimodal Abilities

The primary highlight of GPT-4o is its ability to process and generate content across three main modalities:

- Text: GPT-4o comprehends and produces text at least as well as its predecessors, with more coherent and context-aware output.

- Speech: Unlike GPT-4 Turbo, GPT-4o integrates speech processing, enabling it to understand spoken language and respond in real-time. This includes recognizing nuances in the user’s voice and generating responses in various emotive styles, even singing.

- Vision: GPT-4o can analyze visual data, allowing it to interpret images and videos. It can respond to queries about visual content, such as identifying objects in a photo or explaining software code displayed on a screen.

Enhanced Interaction and Responsiveness

- GPT-4o is designed to support a more interactive user experience. It responds in real time, so users can interrupt the AI while it is speaking and the model adjusts its reply on the fly.

- This makes the exchange feel closer to a natural human conversation.
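
The interruption behavior described above can be pictured with a small toy loop. This is only an illustrative sketch, not OpenAI’s implementation: the function name `speak_until_interrupted` and the `user_is_speaking` callback are hypothetical, and a real system would work on streaming audio rather than a list of strings.

```python
from typing import Callable, Iterable, List


def speak_until_interrupted(
    chunks: Iterable[str],
    user_is_speaking: Callable[[], bool],
) -> List[str]:
    """Emit reply chunks one at a time, stopping as soon as the user cuts in."""
    spoken: List[str] = []
    for chunk in chunks:
        # Check for an interruption before every chunk, not only at the end.
        if user_is_speaking():
            break
        spoken.append(chunk)
    return spoken


# Hypothetical scenario: the user starts talking after two chunks were spoken.
reply = ["Sure,", "here is", "a long", "explanation"]
checks = iter([False, False, True])
spoken = speak_until_interrupted(reply, lambda: next(checks))
print(spoken)
```

The point of the design is that the check happens between chunks, so the assistant can stop mid-reply and hand the turn back to the user.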

Application in Consumer and Developer Products

- The model is set to be rolled out iteratively across OpenAI’s developer and consumer-facing products.

- This strategic deployment aims to integrate GPT-4o’s advanced capabilities into various applications, enhancing both the functionality and user experience of OpenAI’s offerings.

Improved Performance and Accessibility

- GPT-4o boasts significant improvements in processing speed and efficiency compared to its predecessors.

- It is also more multilingual, with enhanced performance in approximately 50 languages, making it more accessible to a global user base.

- Additionally, it offers increased cost-effectiveness and higher rate limits, particularly in integrated services like Microsoft’s Azure OpenAI Service.

Key Insights into GPT-4o: Highlighting Its Features

Before GPT-4o, users could already talk to ChatGPT through Voice Mode, with average latencies of 2.8 seconds for GPT-3.5 and 5.4 seconds for GPT-4.

- Voice Mode functioned as a pipeline comprising three distinct models: an initial model transcribed audio to text, which was then processed by GPT-3.5 or GPT-4 to generate text, and finally, a simple model converted the text back into audio.

- However, this design meant that GPT-4, the main source of intelligence, lost a lot of information: it could not directly observe tone, multiple speakers, or background noise, and it could not output laughter, singing, or emotion.

- With the introduction of GPT-4o, a single new model was trained end-to-end across text, vision, and audio, enabling all inputs and outputs to be processed by the same neural network.

- Because GPT-4o is OpenAI’s first model to combine all of these modalities, the exploration of its capabilities and limitations is still in its early stages.
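
The difference between the older three-stage pipeline and a single end-to-end model can be illustrated with a toy sketch. Everything here is a stand-in: the `Audio` class and the functions `transcribe`, `text_model`, `synthesize`, and `end_to_end_voice_mode` are hypothetical names that only mimic the flow of information, not the real models.

```python
from dataclasses import dataclass


@dataclass
class Audio:
    """Toy stand-in for an audio clip: the words plus non-verbal signals."""
    words: str
    tone: str = "neutral"
    speakers: int = 1


def transcribe(audio: Audio) -> str:
    # Stage 1: speech-to-text keeps only the words; tone and speaker count are dropped.
    return audio.words


def text_model(prompt: str) -> str:
    # Stage 2: the language model (GPT-3.5 or GPT-4 in the article) sees text only.
    return f"reply to: {prompt}"


def synthesize(text: str) -> Audio:
    # Stage 3: text-to-speech produces audio with a default, flat tone.
    return Audio(words=text)


def pipeline_voice_mode(audio: Audio) -> Audio:
    """Old Voice Mode shape: three separate stages chained together."""
    return synthesize(text_model(transcribe(audio)))


def end_to_end_voice_mode(audio: Audio) -> Audio:
    # A single model receives the whole clip, so it can react to tone as well as words.
    return Audio(words=f"reply to: {audio.words}", tone=audio.tone)


clip = Audio(words="tell me a joke", tone="excited", speakers=2)
old = pipeline_voice_mode(clip)
new = end_to_end_voice_mode(clip)
print(old.tone, new.tone)
```

In the pipeline version the tone never reaches the text model, which is the information loss the list above describes; in the end-to-end version the same signal is available from input to output.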

GPT-4o Access: Step-by-Step Instructions for New Users

OpenAI announced that the GPT-4o model will be available to all ChatGPT users, both free and paid. It is currently rolling out to paid users, and free users will gain access in the coming weeks. The steps to access it are the same for both groups.

To use ChatGPT-4o on web

Once GPT-4o is available on your account, follow these steps on the web:

- Head over to chatgpt.com and log in using your account details.

- After that, select “GPT-4o” from the drop-down menu located in the top-left corner.

- You can now begin using ChatGPT 4o.

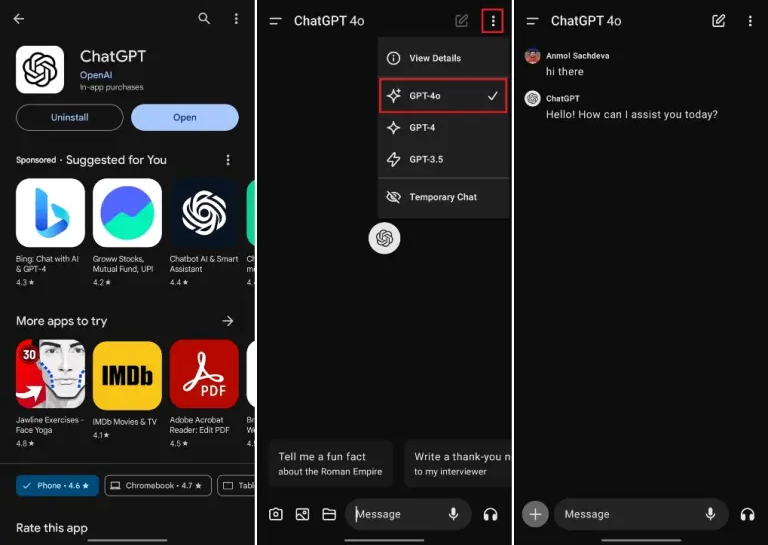

To use ChatGPT-4o on Android and iOS

Once again, early access to the GPT-4o model on Android and iOS is being granted to ChatGPT Plus users. Free ChatGPT users will also receive access in the upcoming weeks.

- Download the ChatGPT app on your Android or iOS smartphone.

- Then, log in to your account.

- After that, click on the 3-dot menu located in the top-right corner, and select “GPT-4o”.

- You can now begin interacting with OpenAI’s newest Omni model. Additionally, it offers support for the latest Voice Mode chat. However, interruptions are not yet supported on Android.

To use ChatGPT-4o on OpenAI Playground

While GPT-4o is still rolling out to free ChatGPT users, you can already try it through the OpenAI Playground, where the newest model is available even to users who do not pay. The Playground is primarily aimed at developers, but general users can also use it to try out the latest models.

- Open your browser and visit the OpenAI Playground. Log in using your account credentials.

- Then, select the “GPT-4o” model from the drop-down menu in the top-left corner.

- You can now begin testing the model for free by sending instructions.

Essential Points on GPT-4o Accessibility

The latest advancement, GPT-4o, represents a significant leap forward in the realm of deep learning, with a focus on practical usability. Extensive efforts have been made over the past two years to enhance efficiency across all levels of the system.

As a result of this research, GPT-4o is being made available to a wider audience, with its capabilities to be gradually introduced, including extended red team access.

- GPT-4o is now available to free-tier users with specific usage limits. Once a user surpasses their message cap, GPT-4o will automatically transition to GPT-3.5, ensuring uninterrupted conversations.

- Plus subscribers benefit from up to 5x more messages with GPT-4o compared to free-tier users.

- Team and Enterprise customers will benefit from increased usage limits, which will make GPT-4o an invaluable resource for group projects.

- The text and image capabilities of GPT-4o are beginning to be integrated into ChatGPT, with availability in the free tier and for Plus users, offering up to 5 times higher message limits.

- Additionally, an alpha version of Voice Mode with GPT-4o will be introduced within ChatGPT Plus shortly.

- Furthermore, developers will have access to GPT-4o in the API as a text and vision model, offering double the speed, half the cost, and 5 times higher rate limits in comparison to GPT-4 Turbo.
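
The free-tier fallback and the Plus multiplier from the list above can be sketched as a small selection rule. This is a toy policy under stated assumptions: the article gives no actual message cap, so `FREE_CAP` is a made-up number, and `pick_model` is a hypothetical helper, not part of any OpenAI product or API.

```python
FREE_CAP = 10            # hypothetical; the article does not state the free-tier cap
PLUS_CAP = 5 * FREE_CAP  # "up to 5x more messages" for Plus, per the article


def pick_model(messages_used: int, plan: str = "free") -> str:
    """Return the model that would serve the next message under this toy policy."""
    cap = PLUS_CAP if plan == "plus" else FREE_CAP
    if messages_used < cap:
        return "gpt-4o"
    # Free tier falls back to GPT-3.5 so the conversation continues.
    # The article does not say what happens to Plus users past their cap;
    # applying the same fallback here is purely an assumption.
    return "gpt-3.5"


print(pick_model(3))           # still under the free cap
print(pick_model(10))          # free cap reached
print(pick_model(10, "plus"))  # same usage, larger Plus cap
```

With these assumed numbers, a free user who has sent 10 messages is moved to GPT-3.5, while a Plus user with the same usage stays on GPT-4o.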

Plans are also underway to introduce support for GPT-4o’s new audio and video capabilities to a select group of trusted partners in the API in the coming weeks.
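
For developers using GPT-4o as a text and vision model, a request might look roughly like the sketch below. It only builds a request body in the Chat Completions message format and prints it; nothing is sent. The helper name `build_vision_request` and the example URL are placeholders, and actually sending the request would additionally need an API key and an HTTP client or the official SDK.

```python
import json
from typing import Any, Dict


def build_vision_request(question: str, image_url: str) -> Dict[str, Any]:
    """Build a Chat Completions request body with one text part and one image part."""
    return {
        "model": "gpt-4o",
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": question},
                    {"type": "image_url", "image_url": {"url": image_url}},
                ],
            }
        ],
    }


# Placeholder URL for illustration only.
body = build_vision_request(
    "What objects are in this photo?",
    "https://example.com/photo.png",
)
print(json.dumps(body, indent=2))
```

Keeping the body as a plain dictionary makes it easy to inspect or log before sending, and the same structure works for a text-only question by dropping the image part.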

Comparative Analysis of ChatGPT-4o with Other AI Models

ChatGPT-4o, also known as the ‘Omni’ model, is distinct due to its multimodal capabilities across text, speech, and vision. This analysis compares ChatGPT-4o to other AI models, showcasing its advancements.

GPT-4o vs. GPT-4 Turbo

- Capabilities: While GPT-4 Turbo was primarily trained on a combination of images and text, capable of tasks like extracting text from images or describing their content, GPT-4o adds speech to its repertoire.

- User Interaction: GPT-4o supports real-time responsiveness, allowing users to interact more dynamically by interrupting and resuming conversations. This is a step beyond GPT-4 Turbo’s more static interaction model.

- Application Range: Both models serve broad application scenarios, but GPT-4o’s speech-handling capabilities allow for a wider range of applications, such as real-time translation, adaptive learning environments, and more immersive interactive experiences.

GPT-4o vs. GPT-3.5

- Intelligence Level: GPT-4o operates at “GPT-4-level” intelligence and adds the ability to reason across multiple modalities, which GPT-3.5, a text-only model, lacks.

- Multilingual Capabilities: GPT-4o enhances performance across roughly 50 languages, which is a significant improvement over GPT-3.5, particularly in terms of nuanced understanding and generation in non-English languages.

- Cost and Efficiency: GPT-4o is described as being more cost-effective and faster than previous models, including GPT-3.5, which aligns with OpenAI’s goals of making AI more accessible and efficient.

Conclusion

OpenAI’s GPT-4o ‘Omni’ marks a transformative leap in AI, enabling seamless interaction between humans and machines through text, speech, and vision. Its real-time responsiveness and nuanced understanding of human input enhance its utility in various applications.

The strategic rollout underscores OpenAI’s commitment to responsible AI deployment. GPT-4o represents a significant advancement in AI and a cornerstone for future developments, promising a future where AI and human interactions are more intuitive and productive.

Faisal Rafeeq is an SEO, PPC, and digital marketing expert. He has worked on multiple e-commerce and web development projects, creating tailored, result-oriented solutions. Recent projects include ERPCorp, Wheelrack, TN Nursery, PROSGlobalinc, Patient9, and many more.