Introduction

Physical AI is the perfect blend of cognitive power and physical capability in machines—AI that goes beyond thinking to taking purposeful, real-world action. It doesn’t just process data; it observes, navigates, adapts, and responds to its environment in real time with precision and intent.

AI won’t take your job, the person who uses AI will take your job ~Jensen Huang

Algorithms are no longer confined to servers and screens. They’re now embodied in mechanical limbs, flexible sensors, and adaptive motors, creating machines whose physical behavior changes based on the data they consume.

In this blog post, we’ll dive deeper into how Physical AI works, explore its key components, and uncover the ways it is reshaping industries and redefining the future of intelligent machines.

What is Physical AI?

Physical AI enables autonomous systems—such as robots, self-driving vehicles, and intelligent environments—to perceive, interpret, and execute complex tasks in the real world. Often called “generative physical AI,” it’s recognized for its capability to generate both insights and actions based on real-time interactions.

- It’s a big step forward because it builds advanced decision-making right into devices that can adjust to their surroundings.

- Unlike older robots that only follow set instructions, physical AI uses real-time data and learning methods like neural networks, reinforcement learning, and adaptive algorithms to understand inputs and respond flexibly.

- This approach lets systems predict changes, improve over time, and adapt to different environments, making them more efficient and versatile.

Features of Physical AI

- Autonomy: Physical AI systems think and act on their own—making decisions based on their surroundings without needing constant human guidance.

- Real-Time Perception: They quickly gather and analyze sensor data, enabling fast, responsive actions in ever-changing environments.

- Adaptability: By interacting with the world around them, these systems continuously learn and improve, adapting to new tasks and conditions with ease.

- Sensory Data Integration: They combine inputs from multiple sensors—visual, tactile, and environmental—to build a full picture of their environment and respond intelligently.

- Physical Interaction: Designed to operate in the real world, Physical AI systems can handle objects, move through spaces, and perform hands-on tasks with precision.
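One common way to combine readings from several sensors is inverse-variance weighting, where noisier sensors count for less. The sketch below is only an illustration of that idea, not a description of any particular product: the function name `fuse_estimates`, the two example sensors, and their noise figures are all assumptions.

```python
def fuse_estimates(readings):
    """Fuse (value, variance) pairs from several sensors into one estimate.

    Each sensor is weighted by 1 / variance, so a precise sensor
    influences the result more than a noisy one.
    """
    if not readings:
        raise ValueError("need at least one reading")
    if any(variance <= 0 for _, variance in readings):
        raise ValueError("variances must be positive")

    weights = [1.0 / variance for _, variance in readings]
    total_weight = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, readings)) / total_weight
    fused_variance = 1.0 / total_weight  # never larger than the best single sensor
    return fused_value, fused_variance


if __name__ == "__main__":
    # Hypothetical distance-to-obstacle readings in meters: (value, variance).
    lidar = (2.00, 0.01)
    camera = (2.30, 0.09)
    value, variance = fuse_estimates([lidar, camera])
    print(f"fused distance: {value:.3f} m, variance: {variance:.4f}")
```

The fused estimate lands closer to the more precise sensor, and its variance is lower than either input, which is the practical payoff of integrating multiple sensors.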

How Does Physical AI Work?

Physical AI operates through a cycle of perception, decision-making, and action:

- Perception: Sensors capture real-time data from the environment.

- Processing: That data is fed into AI algorithms, which extract patterns and insights from it.

- Decision-Making: Machine learning and deep learning models determine the best action.

- Action: The system carries out the chosen action, then reassesses how the situation has changed.

By continually integrating and processing new information, Physical AI systems adjust and become more effective and autonomous.
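The perception, decision-making, and action cycle can be sketched as a simple loop. This is a minimal toy, assuming a hypothetical one-dimensional robot (`Robot1D`) that should stop a safe distance from a wall; the class, the `decide` rule, and all numbers are invented for illustration, and a real system would use learned models instead of a hand-written rule.

```python
from dataclasses import dataclass


@dataclass
class Robot1D:
    """Hypothetical robot on a line that should stop a safe distance from a wall."""
    position: float = 0.0
    wall_at: float = 10.0

    def sense(self) -> float:
        # Perception: distance to the wall (stand-in for a range sensor).
        return self.wall_at - self.position

    def act(self, velocity: float, dt: float = 1.0) -> None:
        # Action: move according to the chosen velocity.
        self.position += velocity * dt


def decide(distance: float, safe_distance: float = 1.0, max_speed: float = 2.0) -> float:
    # Decision-making: approach the wall, slowing down as the gap closes.
    gap = distance - safe_distance
    return max(0.0, min(max_speed, 0.5 * gap))


def run_cycle(robot: Robot1D, steps: int = 30) -> list:
    history = []
    for _ in range(steps):
        distance = robot.sense()        # perceive
        velocity = decide(distance)     # process and decide
        robot.act(velocity)             # act
        history.append(robot.position)  # the next iteration re-senses the new state
    return history


if __name__ == "__main__":
    trace = run_cycle(Robot1D())
    print(f"final position: {trace[-1]:.3f} (wall at 10.0, safe distance 1.0)")
```

Because every iteration re-reads the sensor before deciding, the robot slows as it approaches and settles at the safe distance without overshooting.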

Key Components of Physical AI

Sensors and Actuators

Sensors and actuators are the most important components of physical AI, seamlessly bridging digital commands and tangible actions.

- Technologies like LIDAR let robots perceive and navigate their surroundings by capturing accurate 3D measurements of the environment far faster than a human could.

- This high level of accuracy is crucial for ensuring that robots interact safely and efficiently with complex, real-world environments, laying the groundwork for innovations such as autonomous vehicles and robotic surgery.

Control Systems

Control systems are vital for orchestrating the intricate operations of physical AI devices. They manage the continuous flow of data among sensors, processors, and actuators, ensuring smooth, synchronized performance.

- By leveraging advanced algorithms, control systems empower machines to execute precise, adaptive actions—whether it’s a robotic arm delicately assembling components or a drone maneuvering through unpredictable terrain.

- This coordination significantly enhances both the efficiency and reliability of physical AI technology.
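A classic building block for this kind of sensor-to-actuator coordination is the PID controller. The sketch below is an assumption-laden toy rather than any vendor's control stack: the `PID` class, the gains, and the simulated joint with a constant disturbance are all hypothetical choices made for illustration.

```python
class PID:
    """Minimal proportional-integral-derivative controller."""

    def __init__(self, kp: float, ki: float, kd: float) -> None:
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def update(self, setpoint: float, measurement: float, dt: float) -> float:
        error = setpoint - measurement
        self.integral += error * dt
        # No derivative term on the first call, since there is no previous error yet.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate_joint(target: float = 1.0, steps: int = 600, dt: float = 0.05,
                   disturbance: float = -0.2) -> float:
    """Drive a hypothetical velocity-controlled joint toward `target` (radians).

    The constant disturbance stands in for something like gravity load;
    the integral term is what removes the resulting steady-state error.
    """
    pid = PID(kp=2.0, ki=0.5, kd=0.05)
    angle = 0.0
    for _ in range(steps):
        command = pid.update(target, angle, dt)  # sensor reading in, command out
        angle += (command + disturbance) * dt    # actuator moves the joint
    return angle


if __name__ == "__main__":
    print(f"final joint angle: {simulate_joint():.4f} rad (target 1.0)")
```

The loop reads the sensor, computes a command, and drives the actuator on every tick, which is the data flow among sensors, processors, and actuators described above in its simplest form.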

AI Algorithms

AI algorithms serve as the intelligent core of physical AI, enabling machines to interpret data, make decisions, and learn from experience.

- Incorporating methods from machine learning, neural networks, and reinforcement learning, these algorithms convert sensor inputs into optimized actions in real-time.

- In autonomous systems, for instance, AI algorithms process vast amounts of environmental data to forecast obstacles and adjust behaviors accordingly.

- Their evolving nature ensures that physical AI systems remain adaptable and capable of tackling increasingly sophisticated tasks.

Technological Foundations of Physical AI

Reinforcement Learning (RL)

Reinforcement Learning (RL) lets machines learn through interaction, so they can adapt to new environments without step-by-step human instruction.

- Learning via Trial and Error: Machines explore actions, receive rewards or penalties, and refine strategies to maximize success.

- Simulation Training: Robots trained in high-fidelity virtual environments can transfer skills to real-world tasks with minimal recalibration.

How RL Works:

- An agent (robot) performs actions in an environment.

- Feedback (rewards/penalties) guides behavior.

- Over time, the agent learns strategies that maximize cumulative rewards.
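The three steps above can be sketched with tabular Q-learning, one of the simplest RL algorithms. This is a toy under stated assumptions: the five-cell corridor environment, the reward values, and the hyperparameters are all invented for illustration, and real robots typically rely on deep RL in far richer simulators.

```python
import random

N_STATES = 5        # corridor cells 0..4; cell 4 is the goal
ACTIONS = (-1, +1)  # action index 0 = step left, index 1 = step right


def step(state, action_index):
    """Hypothetical environment: returns (next_state, reward, done)."""
    next_state = min(max(state + ACTIONS[action_index], 0), N_STATES - 1)
    if next_state == N_STATES - 1:
        return next_state, 1.0, True   # reward for reaching the goal
    return next_state, -0.01, False    # small penalty for every other step


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action_index]
    for _ in range(episodes):
        state = 0
        for _ in range(200):  # cap on episode length
            # Epsilon-greedy: mostly exploit the best known action, sometimes explore.
            if rng.random() < epsilon:
                action = rng.randrange(2)
            else:
                action = max(range(2), key=lambda a: q[state][a])
            next_state, reward, done = step(state, action)
            # Q-learning update: move the estimate toward reward + discounted best future value.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
    return q


def greedy_policy(q):
    """Best action index for each non-goal cell."""
    return [max(range(2), key=lambda a: q[s][a]) for s in range(N_STATES - 1)]


if __name__ == "__main__":
    q_table = train()
    print("greedy action per cell (1 = right):", greedy_policy(q_table))
```

The agent is never told that moving right is correct; it discovers this from rewards and penalties alone, which is the trial-and-error loop in miniature.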

Simulation Environments & Digital Twins

Simulation environments and digital twins accelerate Physical AI development by offering risk-free, data-rich training grounds.

Digital Twins:

- Virtual replicas of robots or systems

- Reflect real-time behavior and performance

- Enable constant testing, monitoring, and optimization

Benefits:

- Risk-Free Experimentation: Train and test safely in virtual settings

- Predictive Maintenance: Spot issues before failure occurs

- Continuous Optimization: Real-time data drives performance improvements
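To make the predictive-maintenance idea concrete, here is a minimal sketch of a digital twin that compares what its model expects with what the real machine reports. Everything in it is an assumption for illustration: the `MotorTwin` class, the linear temperature model, and the thresholds are hypothetical and far simpler than a production twin.

```python
from dataclasses import dataclass, field


@dataclass
class MotorTwin:
    """Hypothetical digital twin of a motor: predicts temperature from load."""
    ambient_c: float = 25.0
    degrees_per_unit_load: float = 40.0
    tolerance_c: float = 5.0
    residuals: list = field(default_factory=list)

    def expected_temperature(self, load: float) -> float:
        # Simple assumed model: temperature rises linearly with load (0.0 to 1.0).
        return self.ambient_c + self.degrees_per_unit_load * load

    def ingest(self, load: float, measured_c: float) -> bool:
        """Record one real-world reading; return True if maintenance is advised."""
        self.residuals.append(measured_c - self.expected_temperature(load))
        return self.needs_maintenance()

    def needs_maintenance(self, window: int = 3) -> bool:
        # Flag only when the motor runs hotter than expected for several readings
        # in a row, so a single noisy sample does not trigger an alert.
        recent = self.residuals[-window:]
        return len(recent) == window and all(r > self.tolerance_c for r in recent)


if __name__ == "__main__":
    twin = MotorTwin()
    # (load, measured temperature in Celsius); the last three readings drift upward.
    readings = [(0.5, 45.5), (0.5, 46.0), (0.5, 52.0), (0.5, 53.0), (0.5, 54.0)]
    for load, temp in readings:
        print(f"load={load}, measured={temp} C, alert={twin.ingest(load, temp)}")
```

The alert fires only once the measured temperature has exceeded the twin's prediction by more than the tolerance for three consecutive readings, which is the "spot issues before failure occurs" pattern in its simplest form.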

Robotics + AI: The Backbone of Physical AI

The synergy of robotics and AI defines Physical AI’s capabilities, evolving from rigid machines to adaptive, bio-inspired systems.

Key Robotics Innovations:

- Industrial Robots: High precision, repetitive task handling

- Service Robots: Support roles in healthcare, logistics, and retail

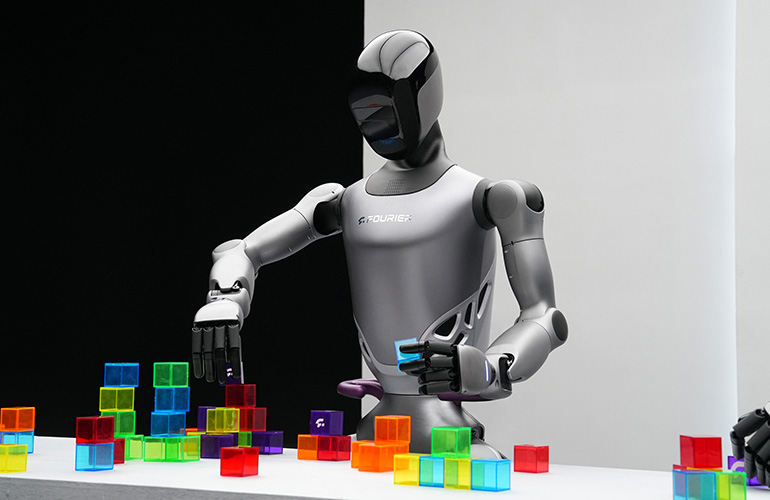

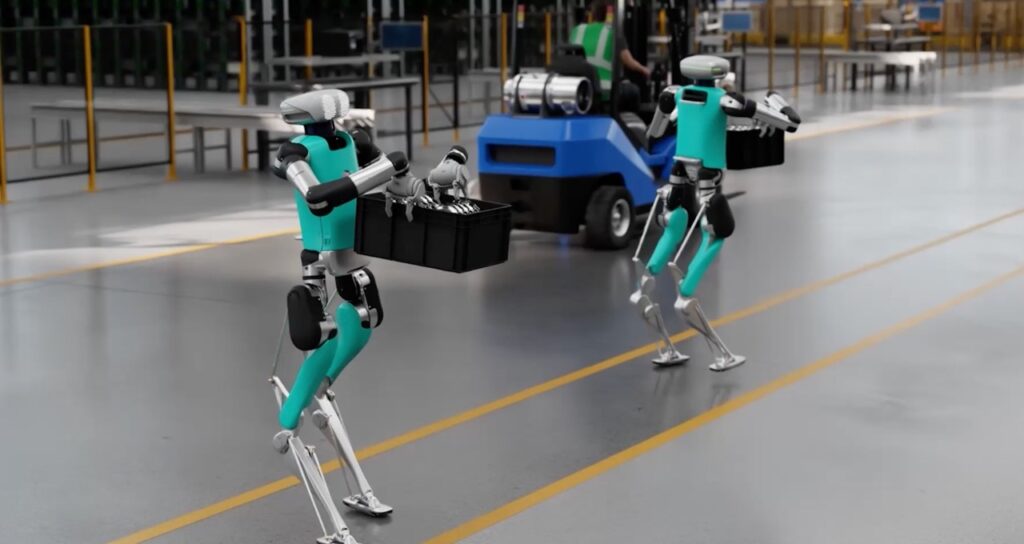

- Humanoid Robots: Natural human-robot interaction via mobility and perception

- Soft Robots: Flexible materials mimic biological movements, ideal for delicate or adaptive tasks

Bio-Hybrid Robotics:

- Bio-Actuated Movement: Uses living tissues to power motion

- Neural Interface Prosthetics: Prosthetics respond to brain signals

- Biohybrid Sensors: Detect environmental changes more naturally

The convergence of robotics, bioengineering, and AI is poised to redefine how machines interact with the physical world.

Case Studies and Applications of Physical AI

Physical AI, which integrates artificial intelligence into robots and other physical systems, is being leveraged by various companies to transform industries such as warehousing, logistics, and manufacturing.

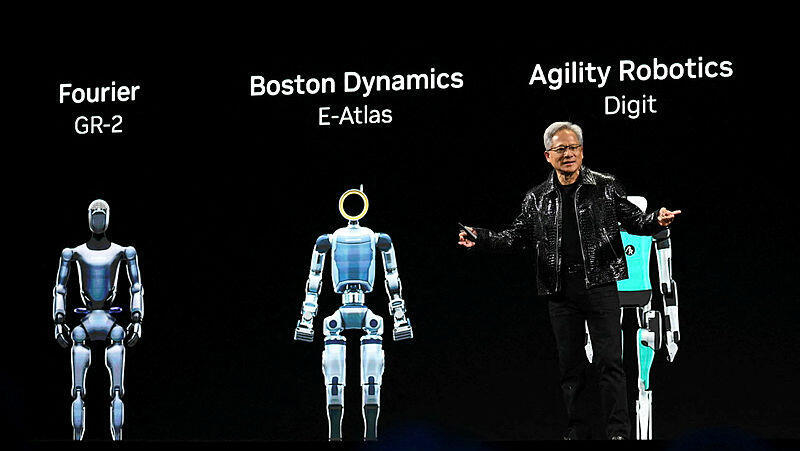

Nvidia

Nvidia’s Omniverse platform enables the creation of highly accurate digital twins of physical environments. By digitizing warehouse information, companies can simulate and optimize operations, leading to increased efficiency and reduced costs.

This technology allows for real-time collaboration and testing of various scenarios without disrupting actual operations, providing a significant advantage in warehouse management.

NVIDIA’s “three computers” approach forms the foundation of its physical AI strategy. The first computer trains robot brains using a combination of real and synthetic data. The second, a simulation computer powered by Omniverse, teaches robots to operate in virtualized environments. The third deploys these algorithms in the physical world.

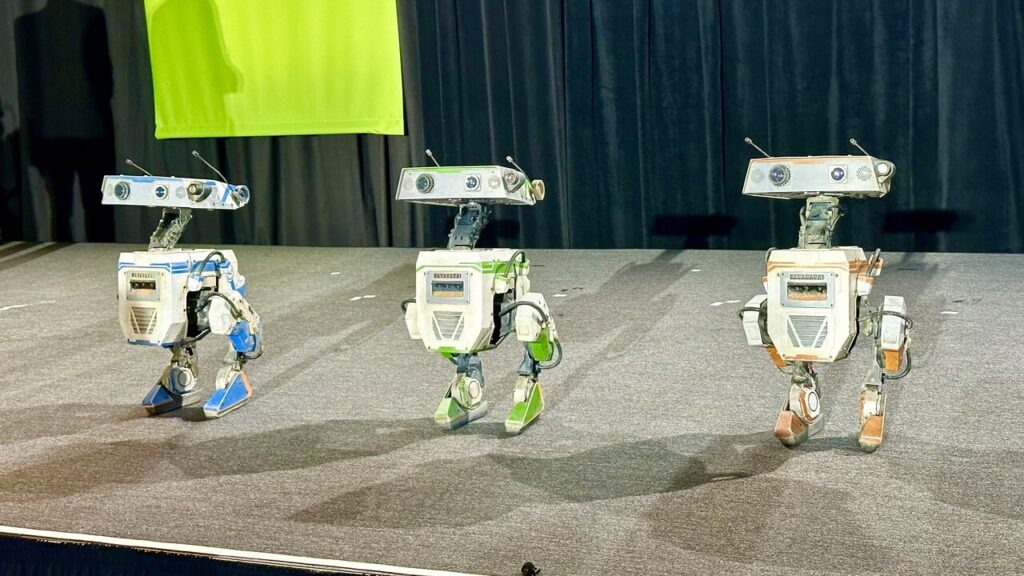

At its GTC 2025 keynote, Nvidia, alongside Google DeepMind and Disney Research, unveiled Blue, an AI-powered robot inspired by Star Wars.

The robot leverages Newton, a newly developed open-source physics engine created by the trio for real-time robotic simulation. Nvidia CEO Jensen Huang demonstrated Blue’s abilities on stage, underscoring its promise for enhancing robot training in the future.

NVIDIA has developed concrete products for each stage of its three-computer approach, from the GR00T N1 foundation model for robots to the Omniverse Mega blueprint for fleet simulation to the DRIVE AGX in-vehicle computer for autonomous vehicles.

Kawasaki

Kawasaki is advancing physical AI by integrating sophisticated sensors and AI algorithms into both mobility and industrial solutions.

- Their concept project, CORLEO, is a hydrogen-powered, four-legged robotic “horse” designed to adapt its balance and movement in real time based on a rider’s input, enabling safe, all-terrain navigation.

- This innovation, showcased at the Osaka-Kansai Expo 2025, features adaptive rubber hooves, a dashboard for monitoring key parameters, and a night-vision projector.

In manufacturing, Kawasaki embeds physical AI in industrial robots to enhance precision and efficiency.

- By using sensors to process real-time data, these robots autonomously adapt to tasks such as welding, assembly, and grinding.

- Additionally, systems like “Successor” employ remote instruction and on-the-job learning to transfer expert human skills to robots, addressing workforce shortages and complex production challenges.

Mitsubishi

Mitsubishi Electric has launched its MELFA RH-10CRH and RH-20CRH SCARA robots, providing manufacturers with greater flexibility in adopting digital manufacturing while addressing skilled workforce shortages.

These new robots enhance industrial automation through high-speed operation, easy installation, and exceptional efficiency. Compact and lightweight, they are ideal for manufacturers aiming to boost productivity while navigating space and weight constraints.

Accenture

In collaboration with Schaeffler AG, Accenture is working to reinvent industrial automation by integrating physical AI and robotics. Utilizing technologies from Nvidia and Microsoft, they aim to optimize various work scenarios, including human-robot collaboration and full automation. This partnership focuses on creating digital twins to simulate and enhance manufacturing and distribution environments, ultimately improving productivity and efficiency.

KION Group

KION leverages physical AI to digitize warehouse information, creating accurate digital twins on Nvidia’s Omniverse platform. This approach allows for enhanced simulation and optimization of warehouse operations. By integrating AI-powered robots and digital twins, KION aims to make supply chains smarter, faster, and more adaptable to changing conditions.

GXO Logistics

GXO is piloting humanoid robots from companies like Agility Robotics, Apptronik, and Reflex Robotics in its warehouses. These robots perform tasks such as moving containers and recycling materials, aiming to enhance automation in logistics operations. The trials are part of an incubator program that allows GXO to provide real-world feedback to developers, fostering innovation in warehouse automation.

Covariant

Founded in 2017, Covariant focuses on AI-driven robotics technology, enabling robots to perform various tasks in warehouses, such as picking and sorting items. Their technology allows robots to learn manipulation tasks through deep learning and reinforcement learning, with each robot learning from millions of picks performed by connected robots in warehouses around the world.

Applications of Physical AI

- Manufacturing: Physical AI powers robots that adapt in real time to production changes, improving efficiency, reducing errors, and enhancing quality control through AI-driven vision systems.

- Healthcare: AI-enabled surgical robots and wearable devices support high-precision operations and continuous health monitoring, improving diagnostics and treatment outcomes.

- Transportation: Autonomous vehicles and AI-powered traffic systems enhance road safety, reduce accidents, and optimize traffic flow for more efficient and eco-friendly transport.

- Agriculture: AI drones and smart farming machinery help monitor crops, automate tasks, and minimize waste—boosting yields while supporting sustainable practices.

- Home and Personal Use: Smart home devices, like cleaning robots with AI navigation, offer hands-free convenience and efficiency, reshaping home life and personal routines.

Future of Physical AI

- Advancements in Technology: The future of physical AI relies on breakthroughs in 5G, quantum computing, and new materials that boost data speed, computing power, and device versatility.

- Integration with Emerging Technologies: Merging physical AI with blockchain, bioengineering, and renewable energy opens up innovations like secure autonomous systems, advanced prosthetics, and smarter cities.

- Industry Transformation: Physical AI will revolutionize sectors by enabling smarter manufacturing, integrated transportation systems, and more efficient, eco-friendly agriculture.

- Ethical and Regulatory Frameworks: As the technology evolves, developing fair, transparent, and accountable guidelines will be key to building public trust and ensuring responsible adoption.

Conclusion

Physical AI represents a groundbreaking evolution in technological innovation, poised to redefine how industries operate and how individuals engage with technology. Its capacity to merge intelligent algorithms with physical systems opens unprecedented possibilities, fostering smarter solutions across healthcare, manufacturing, transportation, and beyond.

However, achieving its full potential requires addressing the inherent challenges, including technical limitations, ethical concerns, and cost barriers. As we look ahead, the synergy between physical AI and emerging technologies like 5G, quantum computing, and renewable energy will drive the next wave of innovation.

Deepak Wadhwani has over 20 years of experience in software and wireless technologies. He has worked with Fortune 500 companies including Intuit, ESRI, Qualcomm, Sprint, Verizon, Vodafone, Nortel, Microsoft and Oracle in over 60 countries. Deepak has worked on Internet marketing projects in San Diego, Los Angeles, Orange County, Denver, Nashville, Kansas City, New York, San Francisco and Huntsville. He has founded technology startups, including one of the first city guides, online yellow pages, and web-based enterprise solutions. He is an Internet marketing and technology expert and co-founder of a San Diego Internet marketing company.